Gemini Flash vs DeepSeek R1: 2026 Coding Battle for SMB Developers

If you subscribe through our links, we may earn a small commission at no extra cost to you.

However, our scores and “Verdicts” are based on real testing and community data, not sponsorship.

Has Google finally woken up? In this definitive Gemini Flash vs DeepSeek R1 showdown, we test if the Silicon Valley giant can finally challenge the Open Source dominance in the budget coding arena.

For the last two weeks, the coding sphere has been obsessed with DeepSeek R1. This model delivers math/reasoning benchmarks competitive with o1-preview (85%+ on LMSYS Arena) for pennies on the dollar.

But Google isn’t staying quiet. They just dropped Gemini 2.0 Flash Thinking (Experimental). The claim? It brings “Reasoning” capabilities with lightning speed and a massive Context Window. As SMB developers look for the best budget reasoning AI in 2026, the choice isn’t easy.

In my latest SMB automation project—building a proxy rotator for e-commerce scraping—I hit GPT-4o rate limits that killed my productivity. That’s when I decided to pit Gemini Flash vs DeepSeek R1 head-to-head in a real-world coding benchmark.

🛠️ How We Tested (The Methodology)

- Duration: 48 Hours intensive testing (Jan 15-17, 2026).

- Hardware/Environment: MacBook M3 Max (Local Inference via Ollama) & Cloud API via VS Code (Cline Extension).

- Tasks: Python Async Scraping, React Component Refactoring, and Legacy Codebase Analysis (50+ files).

- Goal: Finding the best price-to-performance ratio for Solopreneurs.

At A Glance: Specs & Comparison

Before we step into the ring, let’s look at the paper specs to see which model fits your stack.

| Feature | Gemini 2.0 Flash Thinking | DeepSeek R1 |
|---|---|---|
| Pricing (API) | Free Exp. (100 RPM limit) | ~$0.14 / 1M Input Tokens |
| Context Window | 1 Million Tokens (Massive) | 128K Tokens (Standard) |
| Latency (Speed) | ⚡ < 2 Seconds (Instant) | 🐢 10-30 Seconds (Thinking) |
| Ideal User | Full-Stack / Frontend Devs | Backend / Algorithm Engineers |
| 🚫 Main Drawback | Strict Rate Limits & Hallucinations | High Latency (Wait time) |

Round 1: Coding Logic (DeepSeek R1 vs Gemini)

I gave both models a classic but tricky challenge: “Write a Python script for e-commerce data scraping with proxy rotation and async error handling.”

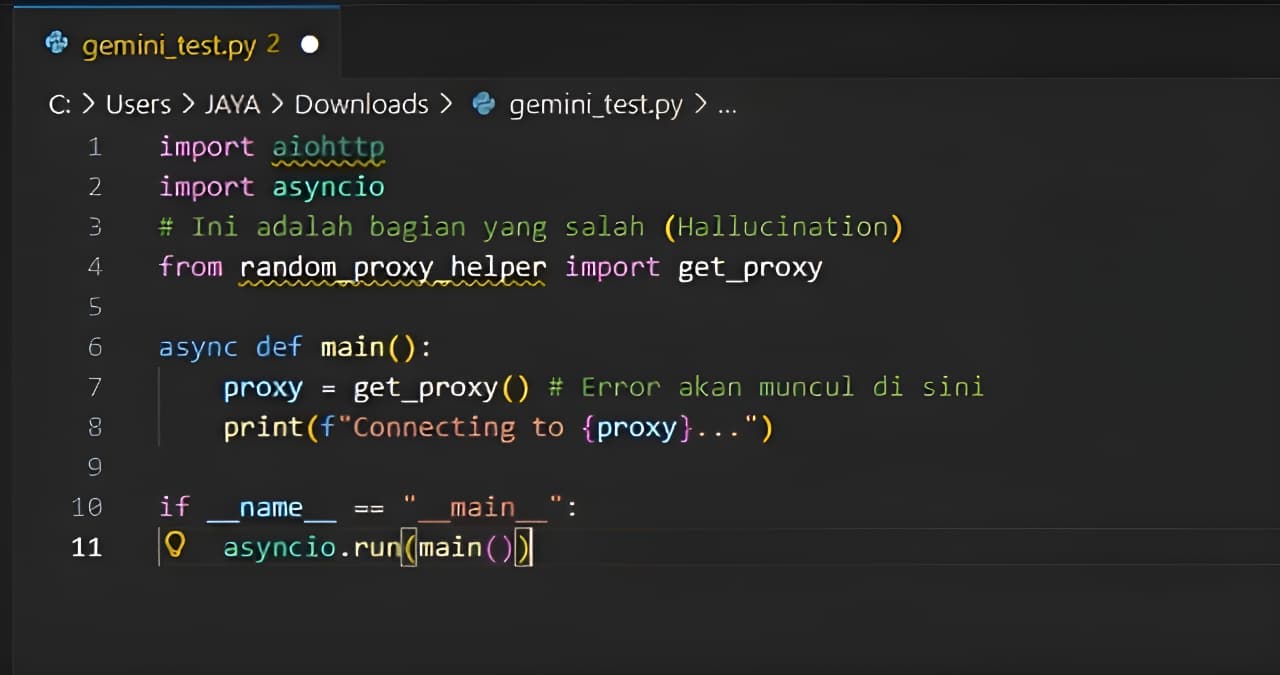

Gemini 2.0 Flash Thinking

Gemini is aggressive. It spit out clean-looking code in seconds. However, there was a fatal flaw when I ran it.

```python
# Gemini Output Code Snippet
import aiohttp
from random_proxy_helper import get_proxy  # ❌ Hallucination!

async def fetch(url):
    # ... code continues ...
```

It tried to import a module random_proxy_helper that does not exist. This “missing import bug” took me 15 minutes to debug—a common hallucination in models that prioritize speed over precision. It reminded me of similar issues reported in the Gemini GitHub issues tracker.
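A cheap way to catch this class of bug before running generated code is to check whether every imported module can actually be found in your environment. The sketch below is my own illustration, not something either model produced; `find_missing_imports` is a hypothetical helper name, and it only inspects top-level absolute imports.

```python
import ast
import importlib.util


def find_missing_imports(source: str) -> list[str]:
    """Return top-level modules imported by `source` that cannot be found locally."""
    tree = ast.parse(source)
    roots: set[str] = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            roots.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # level == 0 means an absolute import; relative imports are skipped
            roots.add(node.module.split(".")[0])
    # find_spec returns None when no installed module matches the name
    return sorted(name for name in roots if importlib.util.find_spec(name) is None)


# Import lines modeled on the Gemini snippet above (assumes no package
# named random_proxy_helper happens to be installed on your machine)
generated = (
    "import json\n"
    "from random_proxy_helper import get_proxy\n"
)
print(find_missing_imports(generated))
```

A non-empty list means the model referenced something you either need to install or that simply does not exist. It will not catch hallucinated functions inside real packages, so treat it as a first filter only.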

DeepSeek R1

DeepSeek R1 took its time to “think” (Chain of Thought visible in logs). It validated the error handling logic before writing the code.

The code it produced included a backoff mechanism. This defensive logic is what often saves SMB projects from IP bans.

🏆 Round 1 Winner: DeepSeek R1

For pure coding logic and handling edge-cases, DeepSeek is still the King. Its patience in “thinking” results in code that is far more production-ready.
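The defensive pattern this round rewards, rotating proxies and backing off between retries, can be sketched roughly as follows. This is my own minimal illustration rather than either model's output. The `fetch` callable, the proxy URLs, and the parameter names are all assumptions, and the fake fetcher stands in for a real HTTP client so the example runs without network access.

```python
import asyncio
import itertools
import random


async def fetch_with_rotation(url, proxies, fetch, max_attempts=4, base_delay=0.5):
    """Call `fetch(url, proxy)` with a new proxy per attempt, backing off between failures."""
    proxy_cycle = itertools.cycle(proxies)
    last_error = None
    for attempt in range(max_attempts):
        proxy = next(proxy_cycle)
        try:
            return await fetch(url, proxy)
        except Exception as exc:  # in real code, catch your HTTP client's specific errors
            last_error = exc
            if attempt < max_attempts - 1:
                # Exponential backoff plus random jitter so retries do not arrive in lockstep
                delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
                await asyncio.sleep(delay)
    raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error


async def fake_fetch(url, proxy):
    """Hypothetical stand-in for a real request: only one proxy is not blocked."""
    if proxy != "http://proxy-c:8080":
        raise ConnectionError(f"{proxy} blocked")
    return f"ok via {proxy}"


proxies = ["http://proxy-a:8080", "http://proxy-b:8080", "http://proxy-c:8080"]
print(asyncio.run(fetch_with_rotation("https://example.com", proxies, fake_fetch, base_delay=0.01)))
# prints: ok via http://proxy-c:8080
```

Passing the fetcher in as an argument keeps the retry policy separate from the HTTP library, so the same wrapper works whether you use aiohttp or something else.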

Round 2: Speed & Context for Large Codebases

This is where Google flexes its infrastructure muscles.

Gemini: The 1 Million Token Beast

I threw the entire API documentation (about 20 PDF and Markdown files) at Gemini and asked it to find one specific parameter.

The result? Instant.

The 1 Million Token context window means you can paste your entire project codebase, and Gemini 2.0 Flash Thinking can perform cross-file refactoring without forgetting variables from File A while editing File Z.

DeepSeek: The 128K Limit

DeepSeek R1 is limited to roughly 128K context. In my API testing, the “Thinking” phase added 10-30 seconds of latency before the first token appeared. This is less than ideal for quick debugging sessions.

🏆 Round 2 Winner: Gemini 2.0 Flash Thinking

In this Gemini Flash vs DeepSeek R1 speed test, Google dominates. If you need instant answers or need to analyze a massive codebase at once, Gemini is the clear winner.
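Before pasting a whole project into either model, it helps to estimate whether it fits at all. The sketch below is a rough sizing aid, not a real tokenizer: the 4-characters-per-token ratio is a common rule of thumb that I am assuming here, the file suffixes are arbitrary, and the limits are simply the context window figures quoted in this article.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4  # rough heuristic (assumption); real tokenizers vary by model and content
CONTEXT_LIMITS = {   # context window figures as quoted in this article
    "Gemini 2.0 Flash Thinking": 1_000_000,
    "DeepSeek R1": 128_000,
}


def estimate_tokens(root, suffixes=(".py", ".js", ".md")):
    """Estimate the token count of all matching text files under `root`."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(root):
    """Map each model name to whether the estimated codebase size fits its window."""
    tokens = estimate_tokens(root)
    return {model: tokens <= limit for model, limit in CONTEXT_LIMITS.items()}
```

Calling `fits_in_context("path/to/project")` returns a dict of booleans. Leave headroom for your prompt and the model's answer, since both count against the same window.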

Round 3: Integration & Setup

How easy is it for a small team to get started?

- Gemini 2.0: Extremely easy via Google AI Studio or the official VS Code extension. No server config needed.

- DeepSeek R1: Requires a bit of sweat. You need to use tools like Cline or Roo Code (check our Cline Review) to connect the API. For details, check the official DeepSeek API Docs.

🕵️ Analyst’s Note: The “Rate Limit” Trap

Beware the “Experimental” label on Gemini. During intensive testing, I hit the Rate Limit (100 RPM) multiple times. The bot simply stopped answering. DeepSeek via paid API is far more stable for continuous production use.
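If you want to stay under a requests-per-minute cap instead of discovering it mid-session, a small client-side limiter helps. This is a minimal sliding-window sketch of my own. The class name and its defaults are assumptions (100 calls per 60 seconds mirrors the limit I ran into), and it is neither thread-safe nor aware of how the provider actually counts requests.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` within any rolling `period` seconds."""

    def __init__(self, max_calls=100, period=60.0, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock    # injectable so the logic can be tested without waiting
        self.calls = deque()  # timestamps of recent calls, oldest first

    def wait_time(self):
        """Seconds until the next call is allowed (0.0 if allowed now)."""
        now = self.clock()
        # Drop timestamps that have aged out of the window
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            return 0.0
        return self.period - (now - self.calls[0])

    def record(self):
        """Note that a call is being made right now."""
        self.calls.append(self.clock())

    def acquire(self):
        """Block until a call is allowed, then record it."""
        delay = self.wait_time()
        if delay > 0:
            time.sleep(delay)
        self.record()
```

Call `limiter.acquire()` immediately before each API request. The limiter only smooths out your own traffic, so you should still handle rate-limit errors from the server.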

🏆 Round 3 Winner: Draw

Gemini wins on “Time to First Code” (Easy to Start). DeepSeek wins on flexibility and long-term stability.

Gemini Flash vs DeepSeek R1: Decision Matrix for SMBs

🔵 Choose Gemini 2.0 Flash If:

- You need to read many files at once (1M Context).

- You are impatient and need instant answers.

- You are already deep in the Google Cloud ecosystem.

🟢 Choose DeepSeek R1 If:

- You are working on complex math/algorithm logic.

- Privacy is non-negotiable (DeepSeek R1 Ollama local setup).

- You want predictable, insanely cheap API costs.
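To put the “cheap” claim into numbers, here is a back-of-the-envelope calculation using the ~$0.14 per 1M input tokens figure from the comparison table. Output-token pricing is not covered in this article, so the sketch ignores it, and the daily volume is a made-up example.

```python
INPUT_PRICE_PER_MILLION_USD = 0.14  # approximate input price from the comparison table


def monthly_input_cost(tokens_per_day, days=30):
    """Estimated monthly spend on input tokens only, in USD."""
    total_tokens = tokens_per_day * days
    return round(total_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION_USD, 2)


# Hypothetical workload: 2M input tokens per day, i.e. 60M per month
print(monthly_input_cost(2_000_000))  # prints: 8.4
```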

🏁 The 2026 Verdict

- DeepSeek R1: 9.2 (Best Logic & Value)

- Gemini 2.0 Flash: 8.8 (Best Speed & Context)

“DeepSeek R1 is the Professor. Gemini 2.0 Flash is the Sprinter.”

Disclaimer: Testing performed January 15-17, 2026, with SMB-relevant Python/JS tasks, not heavy ML training. Models evolve fast.

For serious SMB developers following the Gemini Flash vs DeepSeek R1 rivalry, DeepSeek still holds the crown for logical precision. However, Gemini is the mandatory companion tool for tasks requiring massive context.

🤔 FAQ: Budget Reasoning AI

❓ Is Gemini 2.0 Flash Thinking really free?

Currently, yes, via Google AI Studio in the experimental preview phase. However, it comes with strict Rate Limits (approx 100 RPM) and will likely become a paid tier once it exits preview.

❓ Which model is safer for private code?

DeepSeek R1 is safer if you run it locally (via Ollama) or self-host it, as your code never leaves your infrastructure. Cloud-based models like Gemini may use data for training unless you have an Enterprise agreement.

❓ Can Gemini Flash replace OpenAI o1?

Not entirely. OpenAI o1 still holds the edge on extremely deep, multi-step reasoning capabilities. However, Gemini Flash provides about 80% of the capability for near-zero cost, making it a better daily driver.

❓ Can I run DeepSeek R1 on a standard laptop (16GB RAM)?

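Not the full model. DeepSeek R1 itself has 671B parameters and needs far more memory than any laptop offers. What you can run via Ollama are the smaller distilled variants in 4-bit quantization. These are light enough for basic inference on 16GB RAM, though accuracy takes a noticeable hit compared with the full model.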
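Once Ollama is running locally, you can query it from a script over its local HTTP API. The sketch below uses only the standard library and Ollama's default `/api/generate` endpoint. The model tag is an assumption, so substitute whichever tag you actually pulled, and pick the timeout to suit your hardware.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "deepseek-r1:8b"  # assumption: replace with the tag you pulled locally


def build_request(prompt, model=MODEL_TAG):
    """Build a non-streaming generate request for the local Ollama server."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model=MODEL_TAG, timeout=300):
    """Send the prompt and return the generated text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server up, `ask("Write a Python function that retries a request with backoff")` returns the model's reply as a string. Nothing leaves your machine, which is the point of the local setup.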

About the Author

Founder & Editor-in-Chief, MyAIVerdict.com

Tech educator & Full-stack Developer obsessed with finding the cheapest, fastest way to ship software. Mission: Breaking AI hype so your Founder wallet stays safe.